Automated testing is a big part of modern software development, and Selenium is one of the most popular tools for browser automation. With it, developers and testers can simulate real user actions to find bugs. However, test flakiness is a significant challenge for teams that use Selenium. It is especially frustrating in large test suites, such as those run with JUnit, where stability and reliability are essential.

In this blog, we’ll describe what test flakiness is and why it happens and, more importantly, how to reduce or eliminate it. The methods we share are useful for both novice and experienced QA engineers and testers, because they improve the stability of your Selenium test suite and your confidence in its results.

What is Test Flakiness?

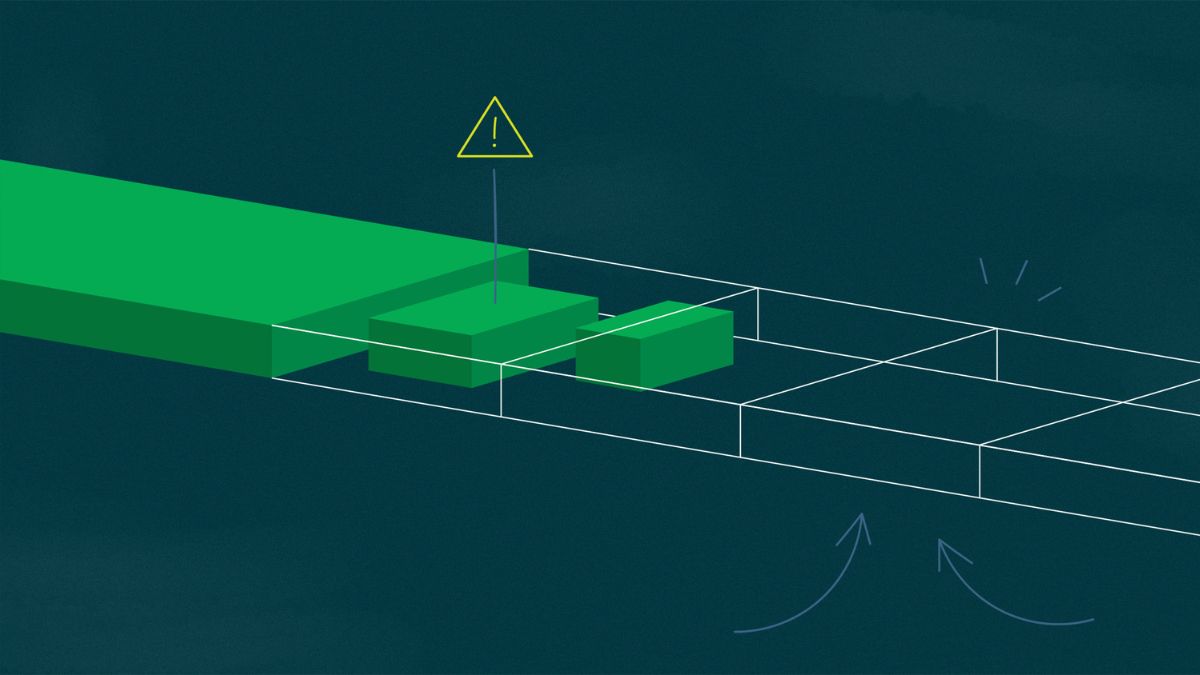

Test flakiness means inconsistency: a flaky test can fail on one run and pass on the next, with no changes to the code. This leads to confusion, wasted time, and loss of trust in the test results.

Flaky tests are misleading. They make it hard to tell whether a failure comes from a real bug or from a problem in the test itself. Over time, teams start ignoring flaky tests, which increases the risk of bugs slipping into production.

Common Causes of Test Flakiness in Selenium

Below are the most common reasons Selenium tests become flaky:

Timing Issues

Many failures happen because a test interacts with an element before it is ready: the element has not been rendered yet, is still hidden, or cannot be clicked. Web pages often delay elements because of animations, loading screens, or AJAX requests.

Dynamic Content

Modern web apps are increasingly dynamic: content changes in response to user actions or data loaded from servers. If a test expects a piece of content to appear and it does not load in time, or the element is re-rendered after the test has located it, the test fails.

Poorly Written Test Scripts

Some tests are written without proper planning or without understanding how the application works. Such tests tend to break as soon as the UI or the application flow changes even slightly.

Dependency on External Systems

Flaky behavior is more common in tests that rely on third-party systems such as payment gateways or external APIs. If the external service goes down or responds slowly, the test fails too, even when the application under test is working correctly.

Environment and Network Differences

- Environmental variances: Differences in browser versions, operating systems, or even screen sizes can cause a test that passed locally to fail in the CI/CD pipeline.

- Bandwidth delays: Slow or unreliable networks can cause timeouts during test runs, especially on remote test labs.

Methods for Reducing Selenium Test Inconsistency

With these common causes in mind, let’s look at some approaches for fixing and preventing flaky tests.

Use Explicit Waits Instead of Implicit Waits

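Instead of guessing how long an element will take to load, use explicit waits, which tell Selenium to keep checking until a specific condition is met or a timeout expires. For instance, wait until the button you are about to click is visible and clickable. An implicit wait, by contrast, applies one global timeout to every element lookup and only waits for the element to exist in the DOM, not for it to be visible or clickable. Selenium’s documentation also warns against mixing implicit and explicit waits, because doing so can cause unpredictable wait times. Explicit waits make your tests more robust and dependable.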
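In Selenium 4’s Java bindings, an explicit wait typically looks like `new WebDriverWait(driver, Duration.ofSeconds(10)).until(ExpectedConditions.elementToBeClickable(By.id("submit")))`, where the `submit` locator is only an example. Here is a minimal, library-free sketch of the polling loop behind such a wait, so it can run without a browser. The `ExplicitWait` class and its `until` method are hypothetical names for illustration and are not part of Selenium.

```java
import java.time.Duration;
import java.util.function.Supplier;

// Hypothetical helper illustrating what an explicit wait does; not Selenium's API.
final class ExplicitWait {
    // Polls `condition` until it returns a non-null value, or throws once `timeout` has elapsed.
    static <T> T until(Supplier<T> condition, Duration timeout, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            T result = condition.get();
            if (result != null) {
                return result; // condition met: continue immediately, no extra waiting
            }
            if (System.nanoTime() >= deadline) {
                throw new IllegalStateException("Condition not met within " + timeout);
            }
            try {
                Thread.sleep(pollInterval.toMillis()); // brief pause between checks
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while waiting", e);
            }
        }
    }

    public static void main(String[] args) {
        // Simulate an element that becomes available about 300 ms after "page load".
        long readyAt = System.currentTimeMillis() + 300;
        String element = until(
                () -> System.currentTimeMillis() >= readyAt ? "submit-button" : null,
                Duration.ofSeconds(5),
                Duration.ofMillis(50));
        System.out.println("Found " + element);
    }
}
```

Like Selenium’s expected conditions, the sketch treats a `null` result as “not yet” and returns as soon as the condition holds. A fast page therefore costs almost no waiting, and a slow one gets up to the full timeout.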

Do Not Include Hard-Coded Sleep Statements

Thread.sleep() and similar calls look like a simple fix, but they make tests both more fragile and slower. A fixed pause is either too short, so the test still fails whenever the page is slower than usual, or too long, so every run wastes time. Use condition-based waits, such as Selenium’s expected conditions, instead.
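The sketch below makes the trade-off concrete. It compares a fixed 500 ms sleep with polling every 50 ms (with a 5-second timeout) across three hypothetical page-load times. It computes the outcomes rather than actually sleeping, and the numbers are illustrative assumptions, not measurements.

```java
// Compares a fixed Thread.sleep() with condition polling for simulated load times (in ms).
// Nothing actually sleeps here; the outcomes are computed so the trade-off is easy to see.
final class SleepVsPoll {
    static final class Outcome {
        final boolean passed;
        final long waitedMs;

        Outcome(boolean passed, long waitedMs) {
            this.passed = passed;
            this.waitedMs = waitedMs;
        }
    }

    // Fixed sleep: always waits sleepMs, and passes only if the element was ready by then.
    static Outcome fixedSleep(long loadMs, long sleepMs) {
        return new Outcome(loadMs <= sleepMs, sleepMs);
    }

    // Polling: checks every pollMs, stops at the first successful check, gives up after timeoutMs.
    static Outcome poll(long loadMs, long pollMs, long timeoutMs) {
        for (long t = 0; t <= timeoutMs; t += pollMs) {
            if (t >= loadMs) {
                return new Outcome(true, t);
            }
        }
        return new Outcome(false, timeoutMs);
    }

    public static void main(String[] args) {
        long[] loadTimes = {200, 800, 350}; // hypothetical page-load times for three runs
        for (long load : loadTimes) {
            Outcome slept = fixedSleep(load, 500);
            Outcome polled = poll(load, 50, 5_000);
            System.out.printf("load %4d ms | sleep(500): %s after %d ms | poll: %s after %d ms%n",
                    load, slept.passed ? "pass" : "FAIL", slept.waitedMs,
                    polled.passed ? "pass" : "FAIL", polled.waitedMs);
        }
    }
}
```

For the 800 ms load, the fixed sleep fails. For the 200 ms and 350 ms loads, it still waits the full 500 ms. Polling passes all three and waits only as long as each load actually takes, rounded up to the poll interval.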

Create Clean and Maintainable Test Scripts

Follow these guidelines when writing test scripts:

- Use clear, descriptive names.

- Focus each test on a single behavior.

- Move common actions into reusable helper methods.

- Avoid duplicated code.

Scripts written this way make it much easier to see which part of a test failed and why.
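One common way to apply these guidelines in Selenium is the Page Object pattern. Each page gets a class that hides its locators behind descriptive helper methods. The sketch below uses a hypothetical `Browser` interface as a stand-in for `WebDriver` so that it runs without a browser. The locators and class names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a browser driver, so the sketch runs without Selenium.
interface Browser {
    void type(String locator, String text);
    void click(String locator);
}

// Page object: the one place that knows how the login page is built.
// If a locator changes, only this class needs updating.
final class LoginPage {
    private static final String USERNAME = "#username"; // hypothetical locators
    private static final String PASSWORD = "#password";
    private static final String SUBMIT = "#login";

    private final Browser browser;

    LoginPage(Browser browser) {
        this.browser = browser;
    }

    // Reusable helper: every test that needs a logged-in user calls this.
    void loginAs(String user, String password) {
        browser.type(USERNAME, user);
        browser.type(PASSWORD, password);
        browser.click(SUBMIT);
    }
}

// Fake browser that records actions, useful for demonstrating (and unit-testing) the helper.
final class RecordingBrowser implements Browser {
    final List<String> actions = new ArrayList<>();

    public void type(String locator, String text) {
        actions.add("type " + locator + " " + text);
    }

    public void click(String locator) {
        actions.add("click " + locator);
    }
}

final class PageObjectDemo {
    public static void main(String[] args) {
        RecordingBrowser browser = new RecordingBrowser();
        new LoginPage(browser).loginAs("alice", "s3cret");
        browser.actions.forEach(System.out::println);
    }
}
```

If the login button’s locator changes, only `LoginPage` needs updating. A failure inside `loginAs` also points directly at the login step.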

Execute Tests in Reliable Environments

Run tests in environments that closely resemble production: use the same browsers, operating systems, and network conditions your users are likely to have.

A cloud-based testing platform that provides real devices and browsers can go further. It lets you run tests across many configurations without having to build and maintain those environments yourself.
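Keeping environment settings in one place makes it easier for local and CI runs to use the same configuration. The sketch below reads the browser, browser version, platform, and window size from environment variables and falls back to defaults when a variable is unset. The variable names (`TEST_BROWSER` and so on), the `TestEnvironment` class, and the default values are placeholder assumptions. Pin them to what your users actually run.

```java
import java.util.Map;

// Hypothetical central place for environment settings, so local runs and CI use the same values.
// Each setting can be overridden by an environment variable; otherwise a placeholder default is used.
final class TestEnvironment {
    final String browser;
    final String browserVersion;
    final String platform;
    final int windowWidth;
    final int windowHeight;

    private TestEnvironment(Map<String, String> env) {
        browser = env.getOrDefault("TEST_BROWSER", "chrome");
        browserVersion = env.getOrDefault("TEST_BROWSER_VERSION", "stable");
        platform = env.getOrDefault("TEST_PLATFORM", "linux");
        windowWidth = Integer.parseInt(env.getOrDefault("TEST_WINDOW_WIDTH", "1920"));
        windowHeight = Integer.parseInt(env.getOrDefault("TEST_WINDOW_HEIGHT", "1080"));
    }

    static TestEnvironment from(Map<String, String> env) {
        return new TestEnvironment(env);
    }

    static TestEnvironment fromSystem() {
        return from(System.getenv());
    }

    @Override
    public String toString() {
        return browser + " " + browserVersion + " on " + platform
                + " at " + windowWidth + "x" + windowHeight;
    }

    public static void main(String[] args) {
        System.out.println("Running tests against: " + fromSystem());
    }
}
```

In a real suite, these values would feed into your driver setup, for example `ChromeOptions.setBrowserVersion(...)` and `driver.manage().window().setSize(new Dimension(width, height))`.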

Make Use of Mocks for External Services

When your tests rely on third-party services, use mocks or stubs to simulate those services and avoid unexpected failures. This way, an outage in the real service cannot fail your test. Mocks also let you simulate specific conditions, such as declined payments or slow responses, that are hard to trigger on demand with the real service.
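The following is a minimal sketch of the idea. It assumes your application reaches the payment provider through an interface you control. `PaymentGateway`, `StubPaymentGateway`, and `CheckoutService` are hypothetical names. In an end-to-end Selenium run, the browser talks to your deployed application, so in practice you would wire the stub into the application’s test configuration (or replace it with an HTTP-level mock server) rather than into the test code itself.

```java
import java.time.Duration;

// Hypothetical abstraction over a third-party payment provider.
interface PaymentGateway {
    boolean charge(String orderId, long amountCents);
}

// Stub used in tests: behaves exactly as the test configures it and never calls the real service.
final class StubPaymentGateway implements PaymentGateway {
    private final boolean approve;
    private final Duration delay;

    StubPaymentGateway(boolean approve, Duration delay) {
        this.approve = approve;
        this.delay = delay;
    }

    @Override
    public boolean charge(String orderId, long amountCents) {
        try {
            Thread.sleep(delay.toMillis()); // simulate a slow provider when delay > 0
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return approve;
    }
}

// Code under test depends only on the interface, so tests can swap in the stub.
final class CheckoutService {
    private final PaymentGateway gateway;

    CheckoutService(PaymentGateway gateway) {
        this.gateway = gateway;
    }

    String checkout(String orderId, long amountCents) {
        return gateway.charge(orderId, amountCents) ? "CONFIRMED" : "PAYMENT_DECLINED";
    }

    public static void main(String[] args) {
        CheckoutService declined = new CheckoutService(new StubPaymentGateway(false, Duration.ZERO));
        System.out.println(declined.checkout("order-42", 1999)); // declined-payment path
    }
}
```

Because the test fixes the stub’s behavior, a declined payment or a slow response can be reproduced on every run, and an outage at the real provider cannot fail the test.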

Use Tools That Detect Flaky Tests

Dedicated tooling can also help, since flaky tests erode confidence in test results and slow down development cycles. LambdaTest offers a suite of tools designed to identify, manage, and reduce flakiness in Selenium test suites.

LambdaTest’s AI-native Test Intelligence platform employs algorithms to detect flaky tests by analyzing patterns in test executions. It identifies tests that exhibit inconsistent behavior across runs, providing insights into potential causes such as environmental factors or unstable test scripts.

The Auto-Healing feature further enhances test stability by automatically addressing common issues that lead to flakiness, such as changes in web element locators due to UI updates. Additionally, LambdaTest provides detailed analytics on flaky tests, including historical data on test performance and failure patterns, helping teams prioritize and address the most problematic tests effectively.

Retry Logic Should Be Used with Care

Some testing frameworks can automatically retry failed tests. This can help with tests that fail for reasons outside your control, but be careful not to rely on it too much. A retry does not fix the underlying cause; it only hides the problem for a while.
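If you do use retries, make sure they do not erase the evidence. The framework-independent sketch below retries an action a limited number of times but keeps the message from every failed attempt. A test that passes on its second try can therefore still be reported as flaky. The `RecordingRetry` name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Retries an action a limited number of times, but records every failure
// so that "passed on retry" stays visible in reports instead of silently disappearing.
final class RecordingRetry {
    static final class Result<T> {
        final T value;
        final List<String> failures; // messages from attempts that failed before the success

        Result(T value, List<String> failures) {
            this.value = value;
            this.failures = failures;
        }

        boolean wasFlaky() {
            return !failures.isEmpty();
        }
    }

    static <T> Result<T> run(Supplier<T> action, int maxAttempts) {
        List<String> failures = new ArrayList<>();
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return new Result<>(action.get(), failures);
            } catch (RuntimeException | AssertionError e) { // Selenium and assertion failures
                failures.add("attempt " + attempt + ": " + e.getMessage());
            }
        }
        throw new IllegalStateException("Failed after " + maxAttempts + " attempts: " + failures);
    }

    public static void main(String[] args) {
        int[] attempts = {0};
        Result<String> result = run(() -> {
            attempts[0]++;
            if (attempts[0] < 2) {
                throw new IllegalStateException("element not clickable yet"); // simulated transient failure
            }
            return "clicked";
        }, 3);
        System.out.println(result.value + ", flaky=" + result.wasFlaky() + ", failures=" + result.failures);
    }
}
```

Feeding `failures` into your test report keeps a “passed on retry” result visible instead of letting it look like a clean pass.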

Prefer Short, Focused Tests Over Long Ones

Long tests that cover many steps are more likely to fail, because every extra step is another chance for a timing or data problem. Split them into shorter tests that each check one feature. This makes failures easier to pinpoint and reduces the chance of flakiness.
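A rough model shows why length matters. As a simplification, assume that each step of a test succeeds independently with probability p. The whole test then passes with probability p raised to the number of steps. The 0.99 per-step figure below is a hypothetical value, not a measurement.

```java
// Rough model: if every step independently succeeds with probability p,
// a test with n steps passes with probability p^n.
final class LongTestOdds {
    static double passProbability(double perStepSuccess, int steps) {
        return Math.pow(perStepSuccess, steps);
    }

    public static void main(String[] args) {
        for (int steps : new int[] {5, 20, 50}) {
            System.out.printf("%2d steps -> %.1f%% chance of passing%n",
                    steps, 100 * passProbability(0.99, steps));
        }
    }
}
```

With p = 0.99, a 5-step test passes about 95% of the time, while a 50-step test passes only about 60% of the time.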

Best Practices for Managing Test Flakiness

Beyond fixing individual flaky tests, you also need processes for managing flakiness over time.

Keep an Eye on Unreliable Tests

Use your testing framework or CI system to flag and track inconsistent tests. Some tools let you mark tests as “flaky” so they do not block releases while their status stays visible.
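Here is one simple rule for flagging flaky tests: if a test both passed and failed on the same commit, its result changed while the code did not. The sketch below applies that rule to a list of recorded CI results. The `FlakyDetector` name and the `Run` shape (test name, commit, outcome) are hypothetical assumptions about what your CI can export.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

final class FlakyDetector {
    // One recorded result from CI (hypothetical shape: test name, commit, outcome).
    static final class Run {
        final String test;
        final String commit;
        final boolean passed;

        Run(String test, String commit, boolean passed) {
            this.test = test;
            this.commit = commit;
            this.passed = passed;
        }
    }

    // A test is flagged as flaky if, for any single commit, it has both passed and failed.
    static Set<String> findFlaky(List<Run> history) {
        // test name -> commit -> outcomes seen for that commit
        Map<String, Map<String, Set<Boolean>>> outcomes = new HashMap<>();
        for (Run run : history) {
            outcomes.computeIfAbsent(run.test, t -> new HashMap<>())
                    .computeIfAbsent(run.commit, c -> new HashSet<>())
                    .add(run.passed);
        }
        Set<String> flaky = new TreeSet<>();
        for (Map.Entry<String, Map<String, Set<Boolean>>> entry : outcomes.entrySet()) {
            for (Set<Boolean> seen : entry.getValue().values()) {
                if (seen.size() > 1) { // both true and false were observed
                    flaky.add(entry.getKey());
                    break;
                }
            }
        }
        return flaky;
    }

    public static void main(String[] args) {
        List<Run> history = List.of(
                new Run("checkoutTest", "a1b2c3", true),
                new Run("checkoutTest", "a1b2c3", false), // same code, different result
                new Run("loginTest", "a1b2c3", true),
                new Run("loginTest", "d4e5f6", false));   // failed only after a code change
        System.out.println("Flaky: " + findFlaky(history));
    }
}
```

Tests that fail consistently on a new commit are not flagged, because that pattern points to a real regression rather than flakiness.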

Look Into Failed Tests Even If They Passed After a Retry

Set up a process for reviewing every failure, including failures that passed on retry. Look for recurring patterns and fix the root cause. Never dismiss a failure just because the test has a reputation for being unreliable.
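To spot recurring patterns, it helps to group failure messages, including those from attempts that later passed on retry. The sketch below normalizes each message by replacing digits, so that timeouts of different lengths count as one cause, and then counts how often each signature occurs. The `FailureTriage` name and the sample messages are made up for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Groups failure messages by a normalized signature so recurring causes stand out.
final class FailureTriage {
    // Replace runs of digits so "after 10 seconds" and "after 15 seconds" group together.
    static String signature(String failureMessage) {
        return failureMessage.replaceAll("\\d+", "N").trim();
    }

    static Map<String, Integer> countBySignature(List<String> failureMessages) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String message : failureMessages) {
            counts.merge(signature(message), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> failures = List.of( // hypothetical messages collected from CI
                "TimeoutException: condition not met after 10 seconds",
                "TimeoutException: condition not met after 15 seconds",
                "StaleElementReferenceException: element is no longer attached");
        countBySignature(failures).forEach((sig, n) -> System.out.println(n + "x " + sig));
    }
}
```

The most frequent signatures are the best candidates for a root-cause fix.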

Work with Others Outside Your Team

Flakiness is not only QA’s problem. Developers, QA analysts, and DevOps engineers all have a role in making tests dependable. Encourage open discussion whenever anyone finds an unreliable test.

Ensure That Tests Are Standalone

Each test must be able to run on its own, in any order, without relying on another test’s outcome. When one test changes shared state, such as updating a user account that other tests also use, those other tests may fail unpredictably.
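One way to avoid shared state is for each test to create its own data. The sketch below is a hypothetical test-data factory that gives every test a uniquely named user. The `TestData` name and the `example.test` email domain are illustrative assumptions.

```java
import java.util.UUID;

// Hypothetical test-data factory: each test creates its own user rather than sharing one account.
final class TestData {
    static final class User {
        final String username;
        final String email;

        User(String username, String email) {
            this.username = username;
            this.email = email;
        }
    }

    // A random suffix keeps names unique across tests, parallel workers, and repeated runs.
    static User freshUser(String prefix) {
        String suffix = UUID.randomUUID().toString().substring(0, 8);
        String username = prefix + "-" + suffix;
        return new User(username, username + "@example.test");
    }

    public static void main(String[] args) {
        User a = freshUser("checkout");
        User b = freshUser("checkout");
        System.out.println(a.username + " / " + b.username); // two independent accounts
    }
}
```

Because no two tests touch the same account, the tests can run in any order, and one test’s changes cannot break another.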

Parallel Testing Should Be Done with Caution

Running tests in parallel shortens execution time, but it can also introduce instability if tests interfere with each other. Make sure every test uses its own data and its own browser session.
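For browser sessions, a common approach in Java Selenium suites is to keep one driver per thread in a `ThreadLocal`. The sketch below uses a hypothetical `Session` class in place of a real `WebDriver` so that it runs on its own. `SessionPerThread` is also a hypothetical name.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a browser session; in a real suite this would be a WebDriver.
final class Session {
    private static final AtomicInteger NEXT_ID = new AtomicInteger();
    final int id = NEXT_ID.incrementAndGet();
}

// Gives each thread its own session, so tests running in parallel never share a browser.
final class SessionPerThread {
    private static final ThreadLocal<Session> CURRENT = ThreadLocal.withInitial(Session::new);

    static Session get() {
        return CURRENT.get(); // created lazily on first use in each thread
    }

    // Call after each test (e.g. from a JUnit @AfterEach method); with a real WebDriver, quit() it first.
    static void release() {
        CURRENT.remove();
    }

    public static void main(String[] args) throws InterruptedException {
        Runnable test = () -> {
            System.out.println(Thread.currentThread().getName() + " uses session " + get().id);
            release();
        };
        Thread first = new Thread(test, "worker-1");
        Thread second = new Thread(test, "worker-2");
        first.start();
        second.start();
        first.join();
        second.join();
    }
}
```

`ThreadLocal.withInitial` creates a session the first time each thread asks for one. `remove()` clears it, so a reused worker thread starts fresh for the next test.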

Keep an Eye on Your Test Infrastructure Health

Monitor the health of your test infrastructure, including CI/CD pipelines, virtual machines (VMs), and test environments. An overloaded machine or a server outage can make tests fail for reasons unrelated to the application. By tracking infrastructure health, you can find and fix these issues before they affect test results.
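As a small example of such monitoring, a test run could start with a pre-flight check that warns when the machine is already busy. The sketch below uses the JDK’s `OperatingSystemMXBean.getSystemLoadAverage()`, which returns a negative value when the load average is unavailable on the platform. The `InfraCheck` name and the threshold of 1.0 load per CPU are arbitrary assumptions; tune the threshold for your own machines.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.OperatingSystemMXBean;

// Pre-flight check: warn before a test run if the machine already looks overloaded.
final class InfraCheck {
    // loadAverage is the 1-minute system load average; a negative value means "not available".
    static boolean isOverloaded(double loadAverage, int processors, double maxLoadPerCpu) {
        if (loadAverage < 0) {
            return false; // metric unsupported on this platform: skip the check
        }
        return loadAverage / processors > maxLoadPerCpu;
    }

    public static void main(String[] args) {
        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        double load = os.getSystemLoadAverage();
        int cpus = os.getAvailableProcessors();
        if (isOverloaded(load, cpus, 1.0)) {
            System.out.println("Warning: load " + load + " on " + cpus + " CPUs; results may be flaky.");
        } else {
            System.out.println("Load looks fine (" + load + " on " + cpus + " CPUs).");
        }
    }
}
```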

Refactor Test Suites Periodically

When your application changes, your tests should change with it. Refactoring tests periodically keeps the suite aligned with the application’s current functionality. Stale tests may become irrelevant or fail outright after the application changes, which is another source of apparent flakiness.

Use Selenium Grid

Test suites often need to run across several browsers and platforms. Selenium Grid lets you distribute tests across machines with defined browser and OS configurations. This reduces flakiness caused by ad hoc environment differences and by overloading a single machine, and makes your test runs more reliable.

Incorporate Reporting and Analytics

Reporting and analytics tools give you an overview of test results and trends over time, such as which tests fail most often and under what conditions. This helps you spot flaky tests early, and the sooner you find and fix them, the more smoothly your test suite runs.

Conclusion

Flakiness is a common problem for teams that use Selenium, but with the right approach your team can control it. Some instability may remain in any test suite. Understanding the likely causes (timing issues, dynamic and conditional content, differences between environments, and so on) will help you reduce it significantly or eliminate it.

Strategies such as using explicit waits instead of hard-coded sleeps, and deliberately writing organized, maintainable test suites, all help reduce flakiness. Most of them focus on making your existing test scripts more stable and consistent.

Controlling test flakiness is not only about fixing broken tests. It is also an opportunity to strengthen the culture of quality and reliability across your development and QA teams. Collaboration, continuous improvement, and attention to detail produce an automated test suite that lets your team release more often, with lower risk and greater confidence every time you deploy.